(UPDATED May 2024) Now that we have discussed a full technology stack based on Neo4j (or other graph databases), and that we have a design and implementation available from the open-source project BrainAnnex.org, what next? What shall we build on top?

Well, how about Full-Text Search?

Full-Text Searching/Indexing

The Brain Annex open-source project includes an implementation of a design that uses the convenient services of its Schema Layer, to provide indexing of word-based documents using Neo4j.

The Python class FullTextIndexing (source code) provides the necessary methods, and it can parse both plain-text and HTML documents (for example, used in "formatted notes"); parsing of PDF files and other formats will be added at a later date.

No grammatical analysis (stemming or lemmatizing) is done on the text. However, a long list of common words ("stop words") is stripped away, substantially paring the text down to the words that are useful for searching purposes.

For example:

Mr. Joe&sons

A Long–Term business! Find it at > (http://example.com/home)

Visit Joe's "NOW!"

pares down (also stripped of all HTML) into:

['mr', 'joe', 'sons', 'long', 'term', 'business', 'find', 'example', 'home', 'visit']

Several common terms were dropped along the way.

Of course, you could tweak that list of common terms, to better suit your own use case...
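To make the idea concrete, here is a minimal sketch of this kind of word extraction. It is not the code of the FullTextIndexing class: the function name `extract_words` and the tiny `STOP_WORDS` set are hypothetical, chosen only to illustrate the technique (strip HTML, lower-case, split into words, drop stop words and duplicates).

```python
import html
import re

# Hypothetical, deliberately tiny stop-word list (an assumption for this sketch);
# a real list of common words would be far longer
STOP_WORDS = {"a", "at", "com", "http", "https", "it", "now", "s", "the", "to", "www"}


def extract_words(text):
    """
    Return the unique, lower-case, non-stop words found in `text`,
    in order of first appearance. HTML tags and entities are removed first.
    """
    text = re.sub(r"<[^>]*>", " ", text)    # Replace HTML tags with blanks
    text = html.unescape(text)               # Turn entities such as &amp; into characters
    words = re.findall(r"[a-z]+", text.lower())   # Keep runs of plain letters only

    seen = set()
    result = []
    for word in words:
        if word not in STOP_WORDS and word not in seen:
            seen.add(word)
            result.append(word)
    return result


sample = ('Mr. Joe&amp;sons<br>A Long&ndash;Term business! '
          'Find it at &gt; (http://example.com/home)<br>Visit Joe\'s "NOW!"')
print(extract_words(sample))
```

With this particular stop-word set, the sample pares down to the same ten words listed in the example above. Changing the set changes what survives, which is exactly the kind of tweaking mentioned.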

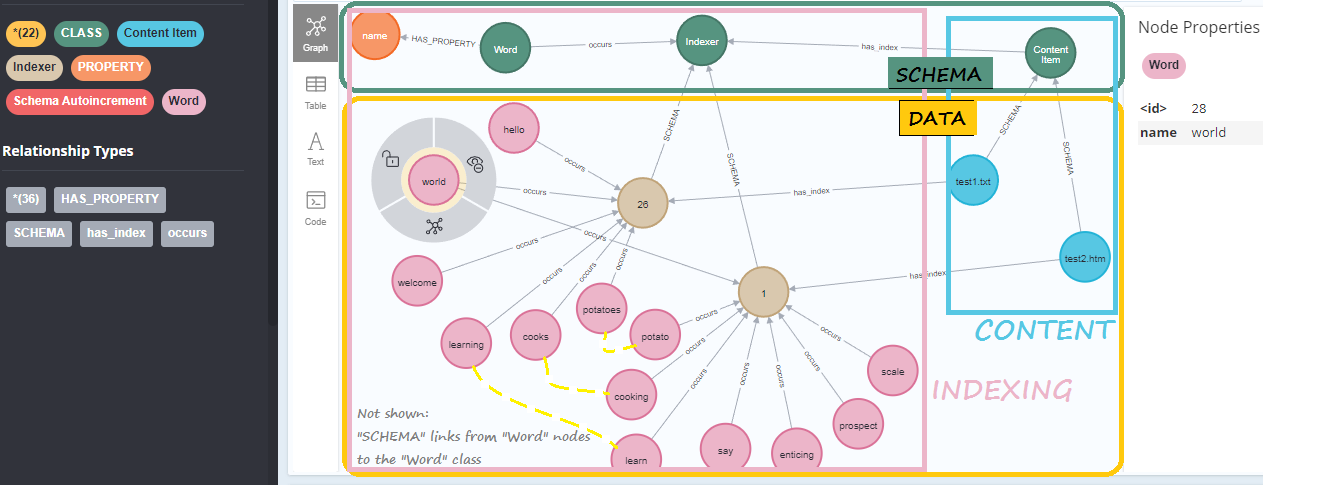

The diagram below shows an example of the Neo4j internal storage of the text indexing for two hypothetical documents, whose metadata is stored in the light-blue nodes on the right. Notice the division of the Neo4j nodes into separate "Schema" (large green box at the top) and "Data" (yellow) sections: this is conveniently managed by the Schema Layer library (as explained in part 5).

One document ("test1.txt"), which uses "Indexer" node 26, contains the exciting text:

"hello to the world !!! ? Welcome to learning how she cooks with potatoes..."

The indexed words appear as magenta circles attached to the brown circle labeled "26"; notice that many common words aren't indexed at all.

The "Indexer" Nodes

The "Indexer" Neo4j nodes (light-brown circles in the above diagram) may look unnecessary... and indeed they are a design choice, not an absolute necessity.

You may wonder, why not directly link the "content metadata" nodes (light blue) to the "word" nodes (magenta)? Sure, we could do that - but then there would be lots of "occurs" relationships intruding into the "CONTENT" module (light blue box on the right.)

With this design, by contrast, all the indexing is managed within its own "INDEXING" module (large magenta box on the left), and the only contact between the "CONTENT" and "INDEXING" sections is through single "has_index" relationships: very modular and clean! The "content metadata" nodes (light-blue circles) only need to know their corresponding "Indexer" nodes, and nothing else; all the indexing remains secluded away from content.
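The separation can be illustrated with a small in-memory stand-in for the graph. This is only a sketch under stated assumptions: the class `TinyIndex` and its method names are hypothetical, plain Python dictionaries take the place of Neo4j nodes and relationships, and the second document's words are made up for the example.

```python
from collections import defaultdict


class TinyIndex:
    """
    In-memory stand-in (hypothetical) for the design described above:
        content item  --has_index-->  indexer  --occurs-->  words
    The content side only ever knows the ID of its indexer.
    """

    def __init__(self):
        self.has_index = {}               # content ID -> indexer ID (one per content item)
        self.occurs = defaultdict(set)    # indexer ID -> set of indexed words
        self._next_indexer_id = 1

    def index_content(self, content_id, words):
        """Create a new indexer for the given content item, and attach the words to it"""
        indexer_id = self._next_indexer_id
        self._next_indexer_id += 1
        self.has_index[content_id] = indexer_id
        self.occurs[indexer_id].update(words)
        return indexer_id

    def search(self, word):
        """Return the sorted IDs of all content items whose indexer holds the given word"""
        matching_indexers = {idx for idx, words in self.occurs.items() if word in words}
        return sorted(cid for cid, idx in self.has_index.items() if idx in matching_indexers)


index = TinyIndex()
index.index_content("test1.txt", ["hello", "world", "welcome", "learning", "cooks", "potatoes"])
index.index_content("test2.txt", ["hello", "cooking"])    # Made-up second document
print(index.search("hello"))
print(index.search("cooks"))
```

Notice that `has_index` is the only structure that mentions content items at all; everything word-related lives on the indexing side, mirroring the single point of contact between the two modules.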

Understanding It Better

A first tutorial is currently available as a Jupyter notebook.

It will guide you into creating a structure like the one shown in the diagram in the previous section, and then performing full-text searches for words.

Be aware that it clears the database; so, make sure to run it on a test database, or comment out the line db.empty_dbase()

The easiest way to run it is probably to install the whole Brain Annex technology stack on your computer or VM (instructions), and then use an IDE such as PyCharm to start up JupyterLab (you can use the convenient batch file "quick.bat" at the top level)... but bear in mind that the only dependencies are the NeoAccess and NeoSchema libraries; the rest of the stack isn't needed.

Improving on It?

While the extraction of text from HTML is already part of the functionality ("formatted text notes" that use HTML have long been a staple of Brain Annex), the parsing of PDF files, Word documents, etc., remains on the to-do list.

Without grammatical analysis, related words such as "cooks" and "cooking", or "learn" and "learning", remain separate (yellow dashed lines added to the diagram earlier in this article.) Is this ugly internally for the database engineers to look at? Sure, it's ugly - and they might demand a pay raise for the emotional pain! BUT does it really matter to the users? I'd say, not really, as long as users are advised that it's best to search using word STEMS: for example, to search for "learn" rather than "learning" or "learns" - to catch all three.
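Here is a minimal sketch of why searching by stem works even without any stemming at indexing time. It assumes that a search term matches every indexed word that starts with it; whether the actual implementation uses prefix matching or substring matching is not stated here, so treat that as an assumption. The function name `search_documents` and the sample data are hypothetical.

```python
def search_documents(stem, word_to_docs):
    """
    Return the sorted names of all documents containing at least one indexed word
    that starts with the given stem (case-insensitive).

    :param stem:            The search term, ideally a word stem such as "learn"
    :param word_to_docs:    Dict mapping each indexed (lower-case) word
                                to the set of documents it occurs in
    """
    stem = stem.lower()
    found = set()
    for word, docs in word_to_docs.items():
        if word.startswith(stem):     # Assumption: prefix match
            found |= set(docs)
    return sorted(found)


# Made-up index: three variants of the same word are stored as separate entries
word_index = {
    "learn": {"a.txt"},
    "learning": {"b.txt"},
    "learns": {"c.txt"},
    "cooks": {"b.txt"},
}
print(search_documents("learn", word_index))       # Reaches all three variants
print(search_documents("learning", word_index))    # Reaches only the exact variant
```

The longer the search term, the fewer variants it can reach, which is why users are better off typing the stem.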

Can your users handle such directions? If yes, voilà, you have a simple design that, while it won't win awards for cutting-edge innovation, is nonetheless infinitely better than not having full-text search!

Being an open-source project, this code (currently in late Beta stage) is something that you can of course just take and tweak to your needs; maybe add some stemming or lemmatizing. Or use the general design ideas presented here as a foundation for your own implementation - with or without the "Schema Layer" that comes with it.

May 2024 UPDATE: new releases of the Brain Annex technology stack now include indexing of the contents of PDF and EPUB documents (using the PyMuPDF library for parsing them.)

Performing Searches

With the word indexing in place, it's a relative breeze to carry out searches. For example, the UI of the Brain Annex open-source project (i.e., the web app that is the top layer in its technology stack) allows word searches through all of the "formatted notes" and "plain-text documents" managed by it. [May 2024 UPDATE: the screenshot below is from an older version; much more complex searches are now supported]

and here are the results of that search, which happens to locate some "formatted notes" (HTML documents) and a "plain-text document" (which contains the searched-for word in its body):

IMPORTANT NOTE: multimedia knowledge management is just one use case of the Brain Annex technology stack, and comes packaged into the standard releases, currently in late Beta. You may also opt to use the lower layers of its technology stack for YOUR own use cases - which may well be totally different. The technology stack was discussed in part 6.

May 2024 UPDATE: searches are now much more sophisticated - and they can be limited to particular Categories and their descendant sub-categories (i.e. an "ontology" may be used to guide and restrict searches): details in this newly released video.

Categories are a semantic layer, and have long been a centerpiece of the Brain Annex project. "Category" is a high-level entity, with associated functions for UI display/edit, that is extensively used in the top layers of the Brain Annex software stack to represent ordered "Collections" of "Content Items" - akin to the layout of paragraphs, sections and diagrams in a book chapter - to be discussed in future articles.
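As a rough illustration of restricting search results to a Category and its descendants, here is a sketch that uses plain dictionaries in place of the graph. All names (`descendants_and_self`, `restrict_to_category`, the sample categories and notes) are hypothetical, and this is not how the Brain Annex code is organized; it only shows the general idea of walking down the sub-category links and filtering the hits.

```python
from collections import deque


def descendants_and_self(root, subcategories):
    """
    Return the set containing `root` and all of its descendant categories.
    `subcategories` maps a category name to a list of its direct sub-categories.
    The `seen` set guards against categories reachable by more than one path.
    """
    seen = {root}
    queue = deque([root])
    while queue:
        current = queue.popleft()
        for child in subcategories.get(current, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


def restrict_to_category(hits, item_categories, root, subcategories):
    """
    Keep only the search hits filed under `root` or any of its descendants.
    `item_categories` maps each content item to the set of categories it belongs to.
    """
    allowed = descendants_and_self(root, subcategories)
    return [item for item in hits if item_categories.get(item, set()) & allowed]


# Made-up category tree and search hits
subcategories = {"Science": ["Physics", "Biology"], "Physics": ["Optics"]}
item_categories = {"note1": {"Optics"}, "note2": {"Cooking"}, "note3": {"Science"}}

print(restrict_to_category(["note1", "note2", "note3"], item_categories,
                           "Science", subcategories))
```

In a graph database, the descendant walk would typically be a variable-length path query rather than an explicit loop.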

Future Directions

One final thought: once one has a good set of "word" nodes in a graph database, possibilities beckon about adding connections!

It might be as straightforward as creating "variant_of" relationships (perhaps an alternative to traditional stemming or lemmatizing)...

or it might be "related_to" relationships (perhaps aided by the import of a thesaurus database)...
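To hint at what such connections could enable, here is a speculative sketch of query expansion over "variant_of" links, using a plain dictionary in place of graph relationships. The function name `expand_query` and the sample data are hypothetical; nothing like this is claimed to exist in the current code.

```python
def expand_query(word, variant_of):
    """
    Return the sorted list of words to search for, given a search word:
    its base form, plus every known variant of that base form.
    `variant_of` maps a variant word to its base word (e.g. "learning" -> "learn").
    A word with no known links is simply returned by itself.
    """
    base = variant_of.get(word, word)     # A base word is its own base
    variants = {v for v, b in variant_of.items() if b == base}
    return sorted({base} | variants)


# Made-up "variant_of" links
variant_of = {"learning": "learn", "learns": "learn", "cooks": "cook", "cooking": "cook"}

print(expand_query("learns", variant_of))
print(expand_query("potatoes", variant_of))
```

A search for any one variant could then be run against all of the expanded words, without asking users to type stems.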
